CSCI 3310

Operating Systems

Spring 2021

Instructor: Sean Barker

| Assigned: | Monday, March 1 |

| Groups Due: | Wednesday, March 3, 11:59 pm |

| Code Due: | Tuesday, March 16, 11:59 pm |

| Writeup Due: | Friday, March 19, 11:59 pm |

| Collaboration Policy: | Level 1 |

| Group Policy: | Pair-optional (recommended!) |

This project will give you experience writing a concurrent program using threads and synchronization primitives. Your task is to write a program that simulates an OS scheduler for disk requests. Each thread that wants to use the disk can issue requests to the disk scheduler concurrently with other threads. In addition to writing your scheduler, you will write a short report that discusses your technical design.

This writeup consists of two parts: the first part describes the thread library you will use, while the second part describes the disk scheduler that you will write (using the thread library).

This section describes the threading infrastructure for this project. Rather than using a standard library like pthreads, you will use a user-level thread library that is provided to you. Your disk scheduler will use this library to create threads and manage synchronization.

The functions provided by the thread library are described below. Each of these functions returns 0 on success and -1 on failure, except for thread_libinit, which does not return at all on success.

int thread_libinit(thread_startfunc_t func, void* arg)

thread_libinit initializes the thread library. A user program should call thread_libinit exactly once (before calling any other thread functions). thread_libinit creates and runs the first thread. This first thread is initialized to call the function pointed to by func with the single argument arg. Note that a successful call to thread_libinit will not return to the calling function. Instead, control transfers to func, and the function that calls thread_libinit will never execute again.

int thread_create(thread_startfunc_t func, void* arg)

thread_create is used to create a new thread. When the newly created thread starts, it will call the function pointed to by func and pass it the single argument arg.

int thread_yield(void)

thread_yield causes the current thread to yield the CPU to the next runnable thread. It has no effect if there are no other runnable threads.

thread_yield is used to test the thread library. A normal concurrent program should not depend on thread_yield; nor should a normal concurrent program produce incorrect answers if thread_yield calls are inserted arbitrarily.

int thread_lock(unsigned lock)

int thread_unlock(unsigned lock)

int thread_wait(unsigned lock, unsigned cond)

int thread_signal(unsigned lock, unsigned cond)

int thread_broadcast(unsigned lock, unsigned cond)

thread_lock, thread_unlock, thread_wait, thread_signal, and thread_broadcast implement mutex locks and condition variables with Mesa semantics in the thread library.

A lock is identified by an unsigned integer (0 - 0xFFFFFFFF). Each lock may also have a set of condition variables associated with it (which are numbered 0 - 0xFFFFFFFF). Thus, a condition variable is uniquely identified by the tuple (lock number, cond number). Programs can use arbitrary numbers for locks and condition variables (i.e., they need not be numbered from 0 - n).
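Under Mesa semantics, a thread that wakes from a wait has no guarantee that the condition it waited for still holds, so the wait belongs inside a `while` loop that re-checks the condition. The sketch below illustrates that pattern only. Because the provided thread library is not available outside the project, it swaps in `std::mutex` and `std::condition_variable` from the C++ standard library, which the project itself does not use. The `Mailbox` class and its member names are hypothetical, and the comments give the assumed rough correspondence to the thread library calls.

```cpp
#include <condition_variable>
#include <mutex>

// Hypothetical one-slot mailbox protected by a monitor (one lock, two conditions).
class Mailbox {
public:
    // Blocks while the slot is occupied, then stores v.
    void put(int v) {
        std::unique_lock<std::mutex> guard(lock_);  // roughly thread_lock(lock)
        while (full_) {                             // Mesa: re-check after every wakeup
            slot_free_.wait(guard);                 // roughly thread_wait(lock, cond)
        }
        value_ = v;
        full_ = true;
        slot_filled_.notify_one();                  // roughly thread_signal(lock, cond)
    }                                               // guard released: roughly thread_unlock(lock)

    // Blocks while the slot is empty, then removes and returns the value.
    int take() {
        std::unique_lock<std::mutex> guard(lock_);
        while (!full_) {
            slot_filled_.wait(guard);
        }
        full_ = false;
        slot_free_.notify_one();
        return value_;
    }

private:
    std::mutex lock_;
    std::condition_variable slot_free_;    // signaled when the slot becomes empty
    std::condition_variable slot_filled_;  // signaled when the slot becomes full
    int value_ = 0;
    bool full_ = false;
};
```

Replacing either `while` with an `if` would be a bug under Mesa semantics: another thread may run between the signal and the wakeup and change `full_` again.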

In addition to the thread library itself, you are also provided with an interrupt library that can generate interrupts (resulting in thread preemptions) in various ways. Remember that a thread can be preempted by an interrupt at any time, and thus a concurrent program must be robust to arbitrary preemption. The interrupt library is useful in stress testing that a concurrent program does not have synchronization bugs.

The interrupt library provides a single function for use by application programs, start_preemptions, which is detailed below:

void start_preemptions(bool async, bool sync, int random_seed)

start_preemptions() can be used in testing to configure the generation of interrupts (which in turn lead to preemptions).

The sync and async parameters allow several styles of preemptions:

1. async = true: generate asynchronous preemptions every 10 ms using SIGALRM. These are non-deterministic.
2. sync = true: generate synchronous, pseudo-random preemptions before interrupts are disabled and after interrupts are enabled by the thread library. You can generate different (but deterministic) preemption patterns by changing random_seed.

start_preemptions() should be called at most once in the application function started by thread_libinit(). Make sure this is after the thread system is done being initialized.

If start_preemptions() is not called, no interrupts will be generated.

Here is a short program that uses the above thread library, along with the output generated by the program. Make sure you understand how the CPU is switching between two threads (both in the function loop). The local variable i is on the stack and so is private to each thread, while g is a global variable and so is shared between the two threads.

    #include <iostream>
    #include <cstdlib>
    #include <stdint.h>
    #include "thread.h"

    using namespace std;

    int g = 0; // global (shared by all threads)

    void loop(void* a) {
        char* id = (char*) a;
        cout << "loop called with id " << id << endl;

        for (int i = 0; i < 5; i++, g++) {
            cout << id << ":\t" << i << "\t" << g << endl;
            if (thread_yield()) {
                cout << "thread_yield failed\n";
                exit(1);
            }
        }
    }

    void parent(void* a) {
        int arg = (intptr_t) a;
        cout << "parent called with arg " << arg << endl;

        if (thread_create((thread_startfunc_t) loop, (void*) "child thread")) {
            cout << "thread_create failed\n";
            exit(1);
        }

        loop((void*) "parent thread");
    }

    int main() {
        if (thread_libinit((thread_startfunc_t) parent, (void*) 100)) {
            cout << "thread_libinit failed\n";
            exit(1);
        }
    }

Running this program results in the output below:

    parent called with arg 100
    loop called with id parent thread
    parent thread:  0       0
    loop called with id child thread
    child thread:   0       0
    parent thread:  1       1
    child thread:   1       2
    parent thread:  2       3
    child thread:   2       4
    parent thread:  3       5
    child thread:   3       6
    parent thread:  4       7
    child thread:   4       8
    Thread library exiting.

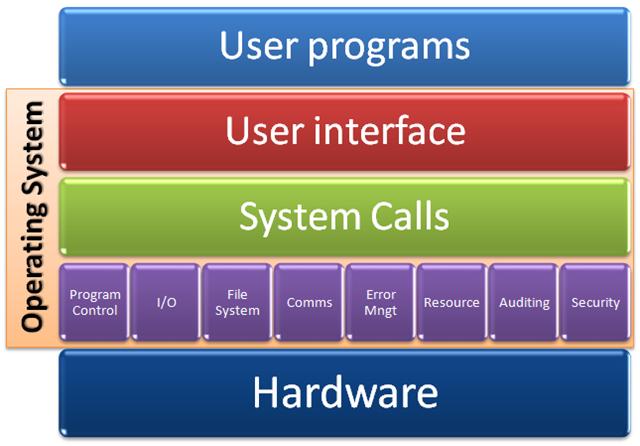

The disk scheduler in an operating system receives and schedules disk I/Os for multiple threads. The purpose of the disk scheduler is to provide concurrent but orderly access to the disk, as well as to ensure that all threads are able to complete their disk requests.

The disk consists of a set of tracks, representing particular locations on the disk. A disk request consists of a particular thread accessing a particular track number. For example, thread #5 might issue a request to access track #37. Once a request is made by a thread, that thread is normally blocked until the disk scheduler completes its request (synchronous I/O). The queue of pending disk requests can contain at most a particular number of requests; if the request queue is full, threads must wait to issue new requests.

Servicing a disk request consists of moving the disk head to the desired track. Since the disk head is a physical component of the disk, at any given time, the head is located at a particular track number of the disk. Servicing a request thus requires moving the disk head from the current track to the track of the next request. This movement is called seeking.

Note that requests in the disk queue are typically NOT serviced in FIFO order. For this project, your scheduler will always choose to service the request that is closest to the current track. This scheduling order is called Shortest Seek Time First (SSTF).
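The SSTF choice itself is a small computation: among the pending requests, pick the one whose track is nearest the current head position. The sketch below shows only that selection step, with no synchronization. The `Request` struct and the `closest_request` function are hypothetical names. The tie-breaking rule (earliest entry wins) is an arbitrary assumption, since the rule above does not say how to break ties.

```cpp
#include <cstddef>
#include <cstdlib>
#include <vector>

// Hypothetical record for one pending disk request.
struct Request {
    int requester_id;
    int track;
};

// Returns the index of the pending request whose track is closest to
// current_track. Assumes pending is non-empty. On a tie, the earliest
// entry wins (an arbitrary choice made for this sketch).
std::size_t closest_request(const std::vector<Request>& pending, int current_track) {
    std::size_t best = 0;
    for (std::size_t i = 1; i < pending.size(); ++i) {
        int candidate = std::abs(pending[i].track - current_track);
        int incumbent = std::abs(pending[best].track - current_track);
        if (candidate < incumbent) {
            best = i;
        }
    }
    return best;
}
```

For example, with the head at track 631 and pending tracks 914, 827, and 785, the function selects the request for track 785 (distance 154, versus 196 for track 827).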

Your program should start by creating a specified number of requester threads to issue disk requests and one servicer thread (i.e., the actual scheduler) to service disk requests. Each requester thread should issue a series of requests for disk tracks as specified by an input file. Each request is synchronous; a requester thread must wait until the servicing thread finishes handling its last request before issuing its next request. A requester thread finishes (i.e., exits) after all the requests in its input file have been serviced. The servicer thread continues until all requester threads have finished.

The servicer thread should handle requests in SSTF order, with the disk initialized to a starting track of 0. Additionally, in order to minimize average seek distance, your servicer thread should keep the disk queue as full as possible. To do so, the servicer thread should only actually handle a request when the disk queue is full, or when all remaining requester threads have an outstanding request in the queue. This approach gives the servicer thread the largest number of requests to choose from (thus minimizing seek time).
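The "queue as full as possible" rule can be written as a single predicate. The sketch below is one possible formulation, not a required design. It assumes that each live requester has at most one request in the queue at a time, which follows from requests being synchronous, so "all remaining requesters have an outstanding request" is the same as the queue length equaling the number of live requesters. The function and parameter names are hypothetical.

```cpp
#include <algorithm>
#include <cstddef>

// Returns true when the servicer may handle a request:
//   - the queue is full (queued == capacity), or
//   - every live requester already has a request queued
//     (queued == live_requesters, assuming one request per requester).
// An empty queue never qualifies, since there is nothing to service.
bool can_service(std::size_t queued, std::size_t capacity, std::size_t live_requesters) {
    if (queued == 0) {
        return false;
    }
    return queued >= std::min(capacity, live_requesters);
}
```

In a threaded program, a predicate like this would typically be evaluated while holding the lock that protects the queue, inside the loop that guards a wait.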

Your program will be called with a variable number of command-line arguments. The required first argument is an integer specifying the maximum number of requests that the disk queue can hold. Each additional argument specifies the input file to be used by a requester thread (and thus, the number of additional arguments determines the initial number of requester threads). For example, if the scheduler executable is called disk, then it could be executed as follows:

./disk 2 disk.in0 disk.in1 disk.in2

In the above example, the size of the request queue is 2, and there are three requester threads, reading from disk.in0, disk.in1, and disk.in2, respectively.
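As a rough illustration of how the command line maps onto program state, the sketch below splits the arguments into the queue capacity and the list of input files. The `Config` struct and `parse_args` function are hypothetical names, and the code assumes the arguments are well formed (at least the queue size is present and is a valid integer).

```cpp
#include <cstdlib>
#include <string>
#include <vector>

// Hypothetical container for the parsed command line.
struct Config {
    int max_queue;                    // first argument: capacity of the disk queue
    std::vector<std::string> inputs;  // remaining arguments: one input file per requester
};

// Assumes argc >= 2 and that argv[1] holds a valid integer.
// The requester ID for each file is its index in cfg.inputs.
Config parse_args(int argc, const char* const argv[]) {
    Config cfg;
    cfg.max_queue = std::atoi(argv[1]);
    for (int i = 2; i < argc; ++i) {
        cfg.inputs.push_back(argv[i]);
    }
    return cfg;
}
```

With the command above, this yields a capacity of 2 and three input files, so requester 0 would read disk.in0, requester 1 disk.in1, and requester 2 disk.in2.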

The format of a requester input file is simple: each line of the input file specifies the track number of the next request, in the range 0 to 999. Your program may assume that all input files are formatted correctly.
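Because each line holds a single integer, reading a requester's file can be a simple extraction loop. The sketch below takes a generic `std::istream` so the same function works with a `std::ifstream` opened on an input file or with an in-memory stream in a test. The function name `read_tracks` is hypothetical, and reading the whole file up front is just one option (a requester could equally read one track at a time).

```cpp
#include <istream>
#include <sstream>  // only needed for in-memory testing with std::istringstream
#include <vector>

// Reads whitespace-separated track numbers until the stream is exhausted.
// Assumes the input is correctly formatted, as the project guarantees.
std::vector<int> read_tracks(std::istream& in) {
    std::vector<int> tracks;
    int track;
    while (in >> track) {
        tracks.push_back(track);
    }
    return tracks;
}
```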

Requester threads are assigned sequential IDs starting from zero, in the order they were given on the command line (e.g., requester 0 reads from disk.in0 in the above example).

After issuing a request, a requester thread should generate output exactly as follows (note the spaces in the strings):

cout << "requester " << requester_id << " track " << track << endl;

Once this line is printed, the request is in the queue and available to be handled by the servicer thread.

After handling a request, the servicer thread should generate output exactly as follows (again note the spaces in the strings):

cout << "service requester " << requester_id << " track " << track << endl;

Once this line is printed, a request is considered to be out of the request queue.

Your program should not generate any other output except for these lines.
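Since the output must match exactly, one option is to build each line with a small helper and print the result, which makes the format easy to check in isolation. The helper names below are hypothetical. The strings they produce are intended to match the two `cout` statements above, minus the trailing newline.

```cpp
#include <string>

// Line printed by a requester thread after issuing a request.
std::string request_line(int requester_id, int track) {
    return "requester " + std::to_string(requester_id) +
           " track " + std::to_string(track);
}

// Line printed by the servicer thread after handling a request.
std::string service_line(int requester_id, int track) {
    return "service " + request_line(requester_id, track);
}
```

A caller would then write, for instance, `cout << request_line(0, 53) << endl;`.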

Here is an example set of input files (disk.in0 through disk.in4). These sample input files are also included in the project starter files.

| disk.in0 | disk.in1 | disk.in2 | disk.in3 | disk.in4 |
|----------|----------|----------|----------|----------|
| 53       | 914      | 827      | 302      | 631      |
| 785      | 350      | 567      | 230      | 11       |

Here is one of several possible correct outputs from running the disk scheduler with the following command:

    $ ./disk 3 disk.in0 disk.in1 disk.in2 disk.in3 disk.in4
    requester 0 track 53
    requester 1 track 914
    requester 2 track 827
    service requester 0 track 53
    requester 3 track 302
    service requester 3 track 302
    requester 4 track 631
    service requester 4 track 631
    requester 0 track 785
    service requester 0 track 785
    requester 3 track 230
    service requester 2 track 827
    requester 4 track 11
    service requester 1 track 914
    requester 2 track 567
    service requester 2 track 567
    requester 1 track 350
    service requester 1 track 350
    service requester 3 track 230
    service requester 4 track 11
    Thread library exiting.

Note that the final line of the output above is produced by the thread library itself, not the disk scheduler program.

Before you start writing your scheduler, make sure you fully understand the operation of the thread library described in part 1 and the example program given there. Ask questions if you aren't sure!

You should also plan in advance how you will implement the synchronization logic in your program (e.g., what the locks and condition variables will be and how they will be used by the various threads).

While writing your scheduler, you may find the following specific tips useful:

- [...] ifstream rather than fstream).
- Test your program with preemptions enabled via start_preemptions as described in part 1 of the writeup. While running with preemptions does not guarantee that your program is correct, it makes it much more likely that synchronization bugs will be exposed.
- If you use valgrind to check for memory safety issues, you are likely to encounter a pile of warnings that may not make sense. Valgrind gets confused by the user-level thread context switches that happen in the thread library, and therefore outputs erroneous warnings. While it is possible to add in Valgrind-specific code hooks to address this, doing so is beyond the scope of the project. Therefore, Valgrind is likely to be of limited use for this project.

As for Project 1, starter code will be distributed via GitHub. GitHub repositories will be made available once groups are assigned (each group will share one repository).

As this is the first group project, you may be making more extensive use of git for collaborative development than in the past. If you haven't done so previously, it is a good idea to go through Part 3 of the Git tutorial, which covers some specific topics applicable to collaboration (most significant of which is handling merge conflicts).

The starter code in your repository includes the thread library files thread.o and libinterrupt.a, a header file for the thread functions thread.h, sample input files disk.in0 through disk.in4, the scheduler source code file disk.cc, and a Makefile that will compile the program using the thread library files. Write your code in disk.cc and do not modify any other source files. You are also encouraged to create your own test input files beyond those provided.

The starter code includes two versions of the thread library files: the primary set compiled for Linux, and a second set compiled for macOS. The provided Makefile will automatically build against the correct thread library depending on the host system. The macOS library is provided if you wish to use it, but as usual, the only officially supported platform is the class Linux server. Always make sure you test on the class server before submitting!

When writing your code, you may use any functions included in the standard C++ library, including (and especially) the STL. You should not use any libraries other than the standard C++ library.

You can submit your program to the autograder as follows:

submit3310 2 disk.cc

Remember that autograder submission limits are per group, not per user. Autograder submissions made by any member in a group will be sent to all group members and count as the single daily submission (or one of the bonus submissions).

In addition to writing the program itself, you will also write a short paper (~2-3 pages) that describes your scheduler. The purpose of this writeup is to help you gain experience with technical writing. In particular, your paper should include the following:

It is important to keep in mind that the writeup is a supplement to (not a substitute for) the code itself. Strive to minimize any degree to which your writeup is 'code-like'. Instead, you should approach the writeup like a technical paper (providing clear prose explanations that can be supplemented by reading the code). You need not try to include every technical detail in the writeup; focus on the major challenges and design decisions as you might explain them to another computer science student.

Upload your writeup as a PDF to Blackboard by the writeup deadline. You only need to submit one copy of the writeup per team. Typesetting your writeup in LaTeX is encouraged but not required.

Your project will be graded on program correctness, design, and style, as well as the quality of your project writeup. Remember that the autograder will only check the correctness of your program, nothing else!

You can (and should) consult the Coding Design & Style Guide for tips on design and style issues. Please ask if you have any questions about what constitutes good program design and/or style that are not covered by the guide.