Greenbar Syndrome and Adaptable Systems

Early on in my career in IT, I was working with a person, Roger, who had been in the technology field for many years. He had a lot of experience and tremendous computing knowledge and was a person I learned a great deal from in the time we worked together. He was on a project team with me, the goal of which was to create an online reporting system for our student records database. At this time XML (Extensible Markup Language) was rather new and we had chosen it to become the backbone of our online delivery mechanisms.

As institutions look to expose their datasets they face obstacles from two very different sources: people that do not appreciate the value of opening up the data, and people that are so used to this openness they can't believe not every system is ready for this.

As we began using this new technology Roger repeatedly asked “How does this make our lives easier?” I and other people on the team would point out things like the capacity for XML to present discreet data sets in multiple formats and he would nod as though he might agree, but later he would say something like: “This is just making our work harder. How is XML helping us?”

This actually went on for quite a few weeks as we worked on the project and I was confused by Roger's continued skepticism. To me the advantages were clear and I felt like we had discussed these advantages thoroughly, but the arguments never really seemed to win Roger over. Eventually it became obvious that there was a fundamental difference in how we were envisioning our work. I recall at one meeting, Roger again asked “How is this making our lives easier?” and I responded somewhat irritably “It isn't. It is making our clients' lives easier.”

And that is when it hit me. There had been a fundamental shift in how data could (and should) be shared that was keeping Roger and I from connecting.

Now, it certainly wasn't the case that Roger was unconcerned about client happiness. Roger was a diligent technician with a lengthy track record of going above and beyond for the institution. But Roger established himself in the field in an era when computing was far less integrated into the average person's life. For most of his tenure, clients didn't actually work directly with data. The most common way for clients to receive data was in the form of massive print-outs delivered at particular times of the year, month, or week. Clients did not have any hands-on activity in regards to these reports. They merely specified the information they wanted and technicians like Roger would write brillant algorithms and queries to fulfill these sometimes almost impossible requests (frequently, the report-generators like Roger actually knew far more about the data and the work proccesses than the people who received them).

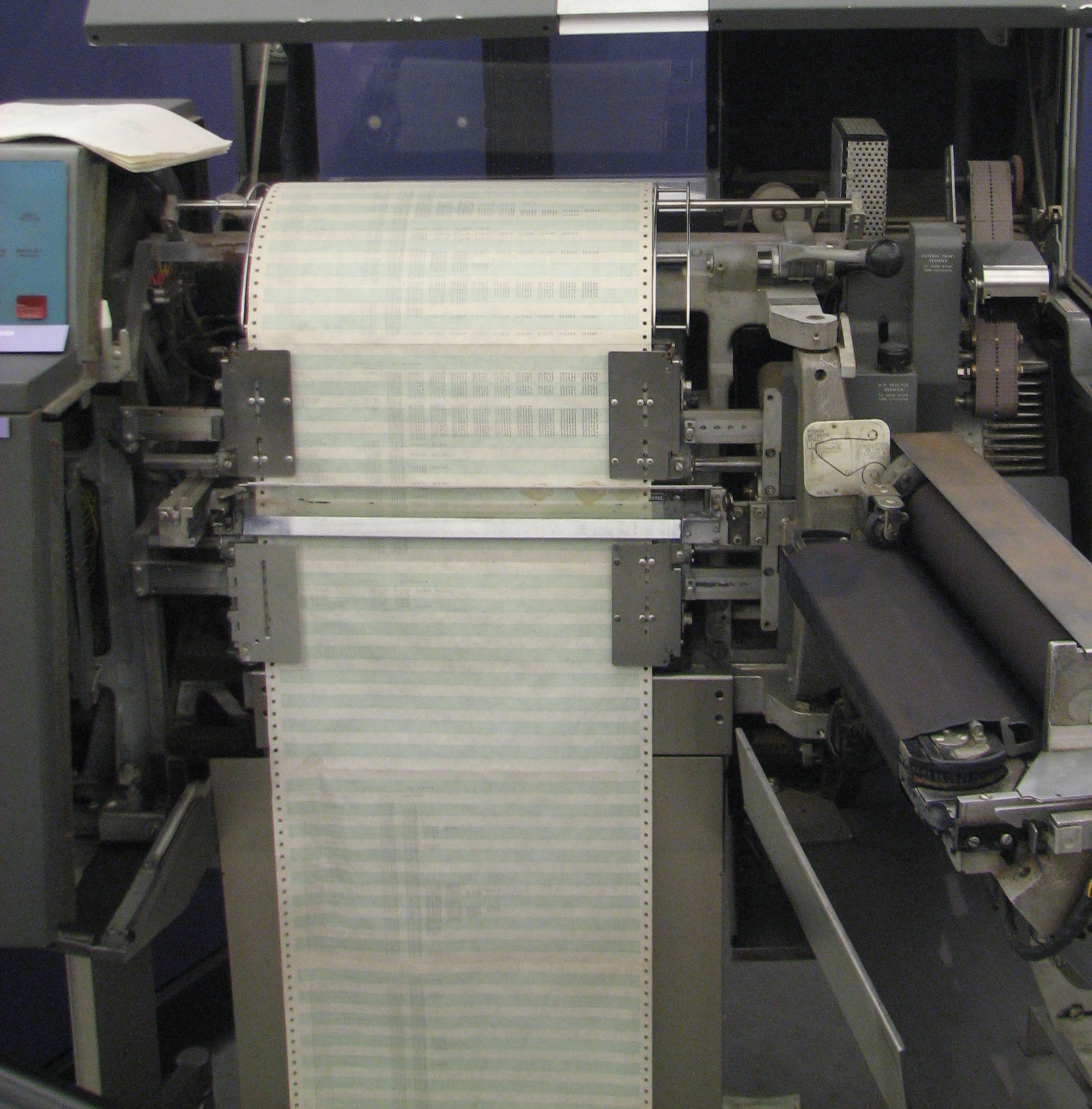

That we, as technologists, should actually turn this process of report-generation over to the clients, was completely foreign to Roger. He knew that most of his clients didn't actually understand their data well enough to form reliable reports. He felt the client would be far better-served making requests to the experts and then waiting the appropriate and reasonable amount of time for the proper and most correct report to be produced. Reports from this era were typically printed out by large line printers onto what was called green bar printer paper, so as I was thinking about Roger's perspective, I took to calling this way of thinking as Greenbar Syndrome.

A line printer printing on traditional green bar paper

People with Greenbar Syndrome, as I came to envision it, may have the following characteristics:

- Skepticism about the general user's ability to correctly interpret data

- A resistance to self-service models

- Disinterest in expanding connections (tying your data with other data sources)

I had actually seen another form of Greenbar Syndrome earlier while working in a library setting. When Internet search engines first started becoming viable ways to find information, most librarians were horrified at this new method by which people were researching their studies. Google searches were classic keyword searches and professional data-retrieval experts that librarians are, they knew this was only one—and frequently the far from optimal—way of finding information. Librarians knew important vocabulary, relevant sources, and the best techniques. Why, they wondered, would people abandon all this for the crudest possible search interface which was guaranteed to produce such haphazard results?

The answer (for both the librarians and Roger) was that users had come to expect more. They expected to be able to do this work on their own without the guidance of the professionals that had overseen this data retrieval in the past. To Roger and the librarians, it seemed like users were willingly accepting an inferior product for the convenience of being able to do the work themselves whenever and wherever they wanted. In some ways that was true, but to put a more optimistic spin on it: The number of ways in which people now envisioned working with data had grown beyond what the traditional reporting mechanisms could support. And while it was true that sophisticated searching often remained beyond the capabilities of regular users, many simple searches and data queries that before would have required submitting a request to the data experts could now be performed on the fly. Users expected to be able to do at least some degree of data retrieval on their own. This is a fundamental shift in data management which many institutions are still coming to terms with.

An important consequence of this shift of data retrieval from the hands of the few experts into the hands of the less trained, is that data sets have had to become more rigid. For example, in the past if you were a data steward for a local historical society and you knew that all your object records used a unique shorthand notation (maybe set up by someone who volunteered there 40 years ago) to track provenance, that was fine because you administrated all of the outgoing reports and could translate them for the end user before they were published or otherwise disseminated. But once your records go online, this becomes an issue for the end users. At the least you will need to provide users with a key to translate this shorthand, but eventually your online users will expect your data to follow more traditional standards and if your data interface continues to mystify them, they will probably just go somewhere else.

Almost everyone can appreciate the experience of someone describing a system that works perfectly well for you as utter chaos. You might have a closet, attic, junk drawer, or purse from which you can easily retrieve any item requested, while others will fish and sort for minutes (hours). The difference of opinion, of course, doesn't actually come from the quality of the system, but rather in the adaptability of that system. If the goal of the system is to make it easy for you to retrieve an object and you can, then that is a quality system. But it isn't necessarily adaptable. If someone else happens upon your storage repository—how quickly can they find something? That is the new question being asked of our online data stores. It is no longer sufficient that everything is accounted for and correctly identified so that it can be found by the system experts. We now (within reason) expect that anyone can stumble upon a system and, making a reasonable effort, find at least the top level data we are exposing to the public.

Two mistakes organizations often make when wanting to move to a more adaptable system are thinking that:

- An unadaptable system isn't a quality system.

- A quality system can be easily converted into an adaptable system

Several years ago, my institution wanted to have a campus-wide events calendar put online. What they had to work with was a piece of room scheduling software that was used for every event. It had just about all the logistic information about an event so it was a natural choice to backend the first online calendar we created. This software was built to track room usage so it was great at showing When and Where. But it was not well-designed for the public consumption in many other ways. In regards to the Who, it was mostly concerned about the administrator that was booking the room, so by default, instead of showing something like "Sponsored by the Film Society" it would say "Owned by the [the film society's administrative coordinator's name]. It provided almost nothing about What the event was or Why it might be worth going to. But, interestingly, the powers that be that wanted this online calendar could not believe it wasn't the easiest thing in the world to take this room scheduling system and transform it into a public-facing calendar! The project languished for years as people tried to find a solution, and people began to lash out at the room scheduling software calling it antiquated and poorly written. In particular, and most interestingly, what people fixated on was the software's online interface. They lamented the poor search features, lack of images and contextual information, and an inability for event owners to make updates. But, of course, that was not simply an interface issue—the entire system was being asked to do something it was never built to do. The slickest of interfaces would not have helped room scheduling data look more like event calendar data.

It wasn't until we actually took concrete steps towards adaptability that we moved to a viable solution. Some steps included:

- Standardizing data entry (controlled vocabularies)

- Opening up the system to allow more event owners access

- Cleaning up areas that had been neglected

- Expanding data sets where possible (such as describing users more fully so we could list departments as event owners instead of administrators)

- Finding areas where the system failed to meet expectations and building out new features

This was no small task, but fortunately, the institution eventually realized that the endeavor was going to require this kind of effort and dedicated the resources needed to make it a success. We were able to extend a quality system so that it became a quality-adaptive system.

As more and more institutions look to move their datasets out into the world of big data they face the problems outlined in this article which come from both ends of the spectrum. On one side are the people that do not completely see the value of opening up the data for general use and interpretation. On the other side people that are so used to this openness in other parts of their lives that they can't believe converting a system into an adaptable model can be all that challenging. Each side brings its unique challenges and before attempting to expose your data, it is worthwhile to stop and assess where your data fits in terms of quality and adaptability and present that state of affairs to all interested parties.